MoRAgent: Parameter Efficient Agent Tuning with Mixture-of-Roles

Abstract

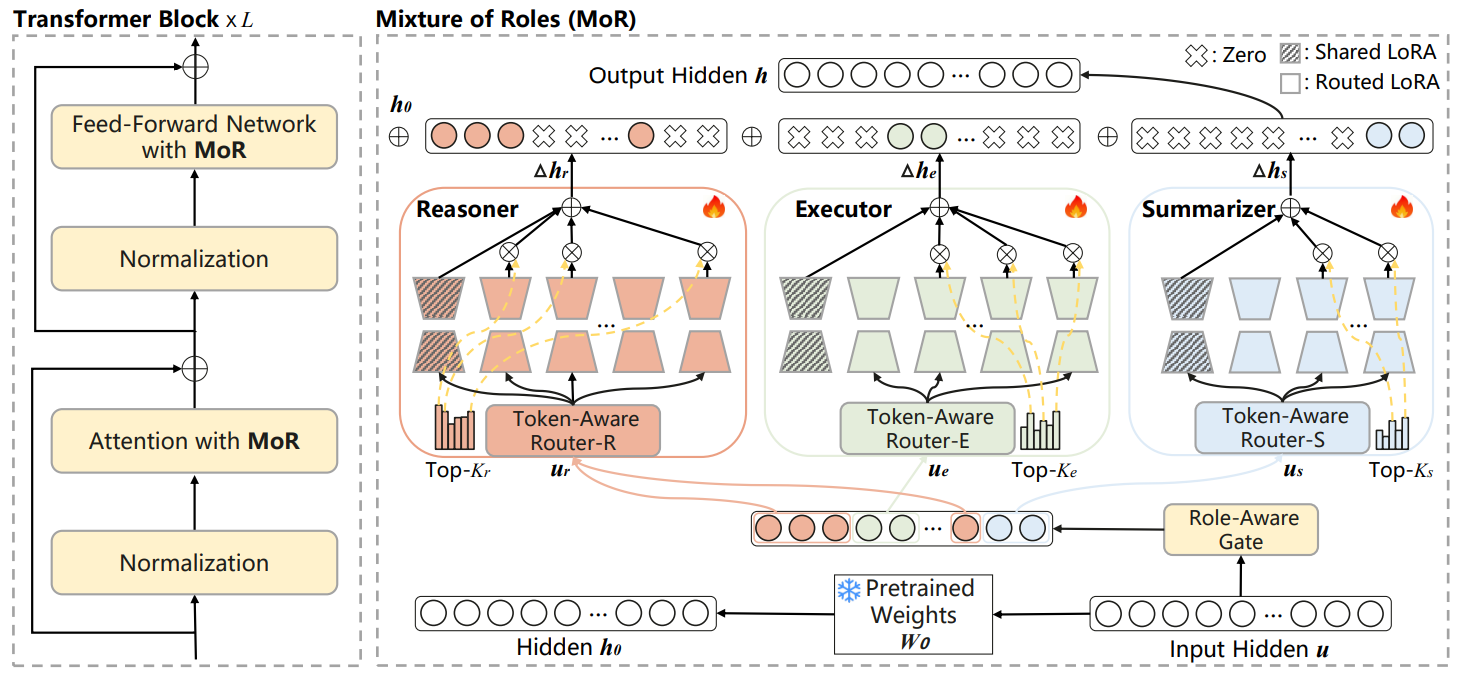

Despite recent advancements in fine-tuning open-source large language models (LLMs) to facilitate agent tasks, parameter-efficient fine-tuning (PEFT) methodologies for agents remain largely unexplored. In this paper, we introduce three key strategies for PEFT in agent tasks: 1) Inspired by the increasingly dominant Reason+Action paradigm, we first decompose the capabilities necessary for agent tasks into three distinct roles: reasoner, executor, and summarizer. The reasoner is responsible for comprehending the user’s query and determining the next role based on the execution trajectory. The executor is tasked with identifying the appropriate functions and parameters to invoke. The summarizer conveys the distilled information from conversations back to the user. 2) We then propose the Mixture-of-Roles (MoR) framework, which comprises three specialized Low-Rank Adaptation (LoRA) groups, each designated to fulfill a distinct role. By focusing on their respective specialized capabilities and engaging in collaborative interactions, these LoRAs collectively accomplish the overall agent task. 3) To effectively fine-tune the framework, we develop a multi-role data generation pipeline based on publicly available datasets, incorporating role-specific content completion and reliability verification. We conduct extensive experiments and thorough ablation studies on various LLMs and agent benchmarks, demonstrating the effectiveness of the proposed method.
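The division of labor among the three roles can be illustrated with a minimal control loop. This is a sketch under stated assumptions, not the authors' implementation: the role names come from the abstract, but `run_agent`, the `Trajectory` structure, the turn budget, and the toy stub roles (which stand in for the fine-tuned LoRA roles) are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List

# Role names follow the abstract; everything else here is a hypothetical illustration.
REASONER, EXECUTOR, SUMMARIZER = "reasoner", "executor", "summarizer"


@dataclass
class Step:
    role: str     # which role produced this step
    content: str  # a thought, a tool call with its observation, or the final answer


@dataclass
class Trajectory:
    query: str
    steps: List[Step] = field(default_factory=list)


def run_agent(query, reasoner, executor, summarizer, tools, max_turns=8):
    """Hypothetical Reason+Action loop in which the reasoner picks the next role each turn."""
    traj = Trajectory(query)
    for _ in range(max_turns):
        next_role, thought = reasoner(traj)
        traj.steps.append(Step(REASONER, thought))
        if next_role == EXECUTOR:
            # The executor chooses a function and its parameters; the tool result is logged.
            name, args = executor(traj)
            observation = tools[name](**args)
            traj.steps.append(Step(EXECUTOR, f"{name}({args}) -> {observation}"))
        elif next_role == SUMMARIZER:
            break
        else:
            raise ValueError(f"unknown role: {next_role}")
    # The summarizer always writes the user-facing reply, also when the turn budget runs out.
    answer = summarizer(traj)
    traj.steps.append(Step(SUMMARIZER, answer))
    return answer, traj


# Toy stand-ins for the three fine-tuned roles (hypothetical).
def toy_reasoner(traj):
    if any(s.role == EXECUTOR for s in traj.steps):
        return SUMMARIZER, "I have the sum; hand over to the summarizer."
    return EXECUTOR, "I need to add the two numbers."


def toy_executor(traj):
    return "add", {"a": 2, "b": 3}


def toy_summarizer(traj):
    last_obs = [s.content for s in traj.steps if s.role == EXECUTOR][-1]
    return "The result is " + last_obs.split("-> ")[-1]


tools = {"add": lambda a, b: a + b}
answer, traj = run_agent("What is 2 + 3?", toy_reasoner, toy_executor, toy_summarizer, tools)
print(answer)                        # The result is 5
print([s.role for s in traj.steps])  # ['reasoner', 'executor', 'reasoner', 'summarizer']
```

In this sketch only the reasoner decides control flow, which mirrors the abstract's description of the reasoner "determining the next role based on the execution trajectory".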

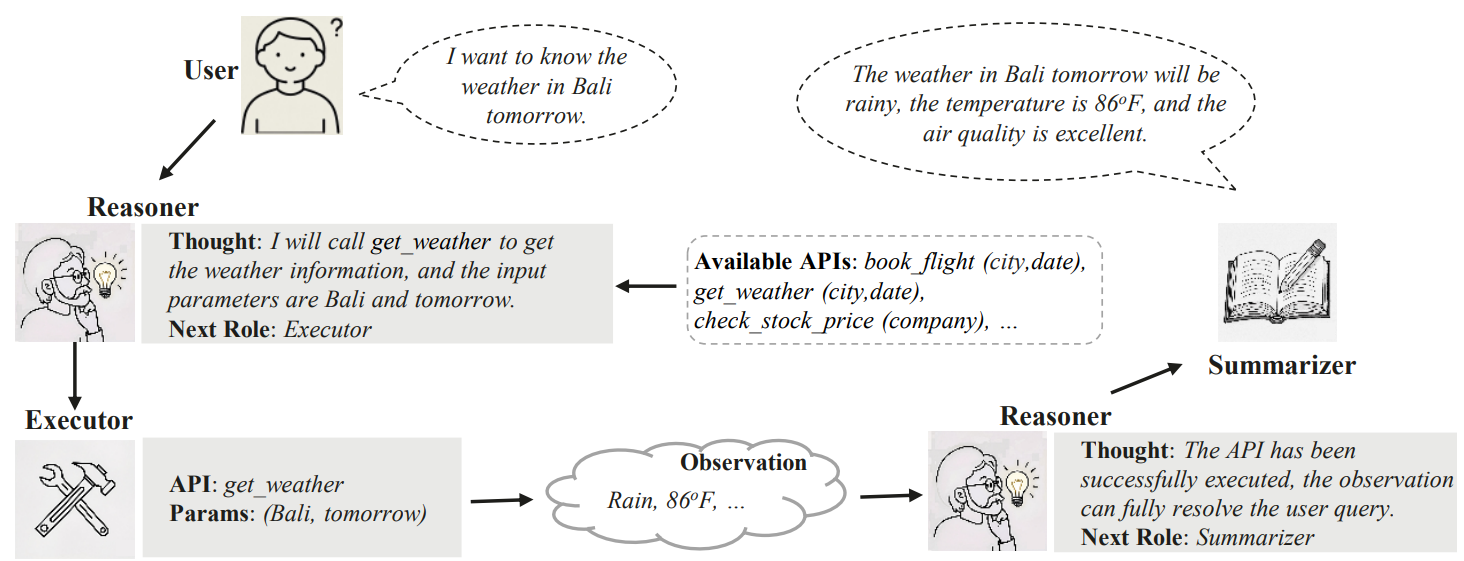

Workflow example of multiple roles collaborating to accomplish one agent task.

The framework of our method. The capabilities necessary for agents are decomposed into three distinct roles: reasoner, executor, and summarizer. Each role consists of a different number of LoRAs according to its learning difficulty. A rule-based role-aware gate and learnable token-aware routers are introduced to allocate LoRAs more reasonably.
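To make the routing described in the caption concrete, the following pure-Python sketch shows one way a rule-based role-aware gate and a token-aware router could be combined in a single linear layer. Everything beyond the caption is an assumption: the class and parameter names, the per-role LoRA counts (3/2/1), softmax scoring with top-k selection, the `alpha / r` scaling, and the random initialization are illustrative choices, and the router weights here are random rather than learned.

```python
import math
import random
from typing import Dict, List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def rand_matrix(rows: int, cols: int, rng: random.Random, scale: float = 0.1) -> Matrix:
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


def softmax(z: Vector) -> Vector:
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


class LoRA:
    """One low-rank adapter: delta(x) = (alpha / r) * B @ (A @ x)."""

    def __init__(self, d: int, r: int, alpha: float, rng: random.Random):
        self.A = rand_matrix(r, d, rng)
        # Standard LoRA initializes B to zeros; it is random here so the effect is visible.
        self.B = rand_matrix(d, r, rng)
        self.scale = alpha / r

    def __call__(self, x: Vector) -> Vector:
        return [self.scale * v for v in matvec(self.B, matvec(self.A, x))]


class MixtureOfRolesLayer:
    """Hypothetical MoR-style layer: frozen W0 plus role-specific LoRA groups."""

    def __init__(self, d: int, experts_per_role: Dict[str, int],
                 r: int = 2, alpha: float = 4.0, top_k: int = 1, seed: int = 0):
        rng = random.Random(seed)
        self.W0 = rand_matrix(d, d, rng)  # stands in for the frozen pretrained weight
        self.groups = {role: [LoRA(d, r, alpha, rng) for _ in range(n)]
                       for role, n in experts_per_role.items()}
        # One router per role group, scoring that group's LoRAs from the token state.
        self.routers = {role: rand_matrix(n, d, rng) for role, n in experts_per_role.items()}
        self.top_k = top_k

    def forward_token(self, x: Vector, role: str):
        # 1) Rule-based role-aware gate: the token's role label selects one LoRA group.
        experts = self.groups[role]
        # 2) Token-aware router: score the group's LoRAs and keep the top-k.
        probs = softmax(matvec(self.routers[role], x))
        chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[: self.top_k]
        norm = sum(probs[i] for i in chosen)
        y = matvec(self.W0, x)
        for i in chosen:
            weight = probs[i] / norm  # renormalize over the selected LoRAs
            y = [a + weight * b for a, b in zip(y, experts[i](x))]
        return y, chosen


layer = MixtureOfRolesLayer(d=4, experts_per_role={"reasoner": 3, "executor": 2, "summarizer": 1}, top_k=2)
tokens = [([1.0, 0.0, 0.5, -0.5], "reasoner"),
          ([0.2, 0.3, -0.1, 0.9], "executor"),
          ([0.0, 1.0, 0.0, 1.0], "summarizer")]
for x, role in tokens:
    y, chosen = layer.forward_token(x, role)
    print(role, chosen, [round(v, 3) for v in y])
```

In this sketch the gate is a hard lookup on the token's role label, so a token never reaches another role's LoRAs; only the choice within a group is soft and, in a real model, trainable.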

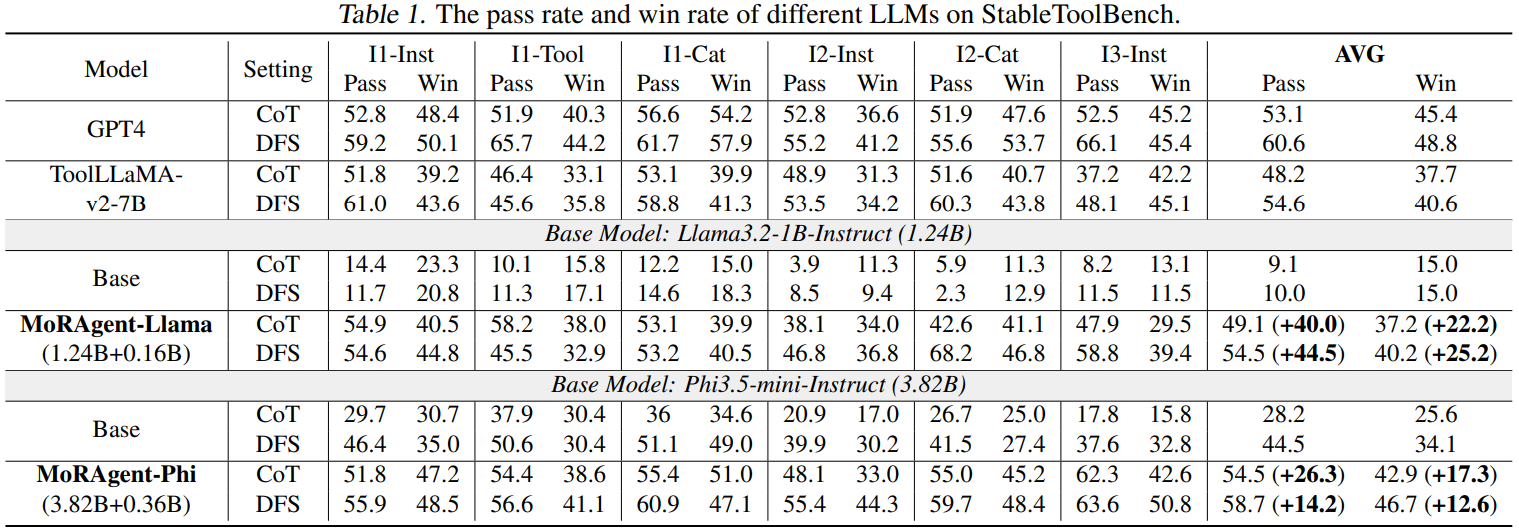

The pass rate and win rate of different LLMs on StableToolBench.

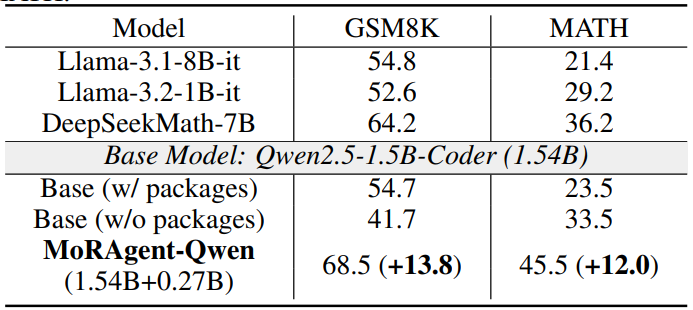

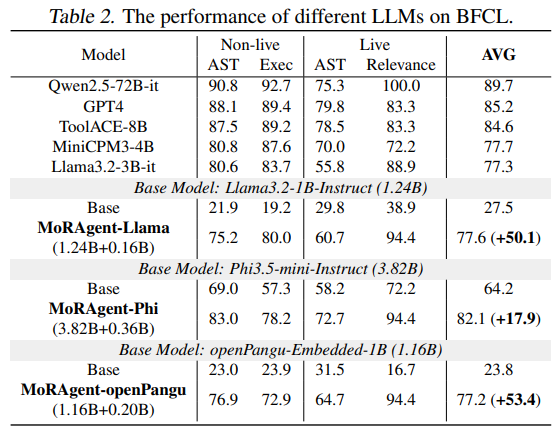

The performance of different LLMs on BFCL.